It's nearly Christmas (again!) and I hope you're way too busy enjoying the best this season has to offer to be reading this! With any luck, the systems and people you look after will all be humming along just fine, leaving you able to spend plenty of time with family and friends.

At times like this, it can be easy to forget that there are a lot of people out there who don't have it as sweet as we do. Where running water is a luxury, we'd find it difficult getting sympathy for our lengthy outstanding bugs list or early morning release woes!

I'm no Bono, but I like to contribute somehow whenever I come across a cause I believe in. Doing something specifically at this time of year has the extra bonus of reminding you exactly how lucky you are during what can be a time of excess.

Now, this blog has always been about the free exchange of ideas - a place where I can capture my original thoughts and experiences from the work I do, in the hope that it will help others with the work that they do. Whatever else it is, it's never been a money making exercise - and that's why I've never before tried to monetize the content, despite a growing readership (hello to both of you).

However, as of today, keener-eyed observers will notice a Google Ads box lurking surreptitiously in the right hand panel, ready to corrupt my benevolent posts with its raw, unbridled marketing vivacity. But don't worry - I haven't sold out yet! Here is the plan...

I'll run Google AdSense for the coming year, and around this time next year, donate all the revenue to charity. Every cent, and a moderate top up from myself.

It is impractical to pick a charity 12 months ahead, but the money will be donated via Global Giving or Just Giving (or maybe both) so that you can keep me honest. I'm thinking about supporting something which helps to establish education in 3rd world countries - that appeals to my "help those who are prepared to help themselves" philosophy - but will take suggestions from the community.

As well as cheques being posted next December, you can expect a running total, say, quarterly - and heck, if things go really well maybe we'll have a mid-year checkpoint and make a donation then too.

So, Merry Christmas and all the best for 2009, and remember, if you see something that interests you on the right hand side then give it a click - it's for a good cause!

See you next year

Eachan

The things I should have learned from years of putting together people and technology to get successful product online. We’ll probably talk about strategy, distributed systems, agile development, webscale computing and of course how to manage those most complicated of all machines – the human being – in our quest to expose the most business value in the least expensive way.

Monday 22 December 2008

Sunday 21 December 2008

When is the right time to launch?

Ignoring commercial concerns such as marketing campaigns, complementary events, and market conditions, this essentially comes down to weighing the quality/completeness of the product against getting to market sooner.

The quality over time to market side has been advocated a few times in recent episodes of Paul Boag's podcast, so I figured I would speak up for the other side. But before I do, let me just say that I don't think there is a right answer to this and, as Paul also conceded, it depends on if your application's success requires a land grab or not.

I am, by nature, an "early and often" man - and that's kind of a vote for time to market over quality. I say kind of because I think it would be more accurate to say that it's a vote for time to market over perfection.

For me, the "often" part is inextricably linked to the "early" part. If you can show a pattern of frequent, regular improvement and feature releases, then you can afford to ship with less on day one. Users can often be more forgiving when they see things turned around quickly, and new things regularly appearing can even become a reason in itself to return to the site more often.

Quality is still a factor, but it isn't as black and white as I've been hearing it presented. In the early days of a new product, I think where you spend your time is more important than overall quality. You should be very confident in anything that deals with accounts, real money, or the custody of users' data before shipping. I would argue that getting those areas right for launch at the expense of other parts of the system is better than a more even systemwide standard of quality. You always have limited resources. Spend the most where it counts the most.

And finally, openness. Be truthful and transparent with your users. Start a blog about development progress and the issues you've run into. Provide feedback mechanisms, and actually get back to people who've taken the time to share their thoughts - with something material too, not just an automated thank-you. Send out proactive notifications ahead of impactful changes and after unplanned events. Stick 'beta' labels on things you're shipping early - it'll keep users' blood pressure down, and you might be surprised by how much of the community is prepared to help.

I am aware that I haven't actually answered the question that lends this post its title. I don't know if there even is an off-the-shelf answer, but I hope that I've at least given you some more ideas on how to make the right decision for yourself.

Friday 19 December 2008

Constraints and Creativity

Does the greatest creativity come from unlimited choice; a totally blank canvas with every possible option and no restrictions, or from difficult circumstances; where pressure is high, the problems are acute and all the easy avenues are closed?

History has proven necessity to be the mother of invention time and time again, and I've personally seen a lot of otherwise-excellent people become paralyzed when faced with too much opportunity.

The current economic circumstances are bringing this into somewhat sharp relief. From time to time I get approached by other entrepreneurs with an idea they want to develop - usually only a couple of times a year, but in the last couple of quarters I've seen half a dozen. There is something about this credit crunch...

Conditions are right for the sorts of things I've seen; internet based, self-service, person to person applications that look for efficiency by cutting out middlemen and using smarter fulfillment. They're all great ideas and I really wish I had the time to help them all out.

Returning to the point, would you get this kind of thinking in times of plenty? Who knows, maybe something good will come out of this credit crunch after all.

Wednesday 17 December 2008

Tradeoffs - You're Doing It Wrong

Our time is always our scarcest resource, and most of the web companies I know are perpetually bristling with more ideas than they could ever manage to ship. We always wish we could do them all, but at the same time we know deep down that it isn't realistic. Under these circumstances, tradeoffs are an inevitable and perfectly normal part of life.

The secret to doing this well is in what things you weigh against each other when deciding how to spend the resources you have.

It's so easy to start down the slippery slope of trading features off against the underlying technologies that support them. Fall into this trap and you'll get a small surge of customer-facing things out quickly, but stay with the pattern (easy to do - as it does reward you well in the short term) and you'll soon find yourself paralyzed by technical debt; struggling against continuously increasing time to market and building on top of a shaky, unreliable platform.

Instead, try trading off a feature and its supporting technology against another feature and its supporting technology. This isn't a carte blanche ticket for building hopelessly over-provisioned systems - it's simply about setting yourself up in the most appropriate way for future requirements and scalability, rather than forgoing the platform work that diligence calls for and starting off behind the curve.

Trading features off against their underlying technology is not just short sighted, it's also unrealistic. You see, the fact is that all customer-facing features have to be maintained and supported anyway, no matter how badly we wish we could spend the time on other things. And right up front, when we first build each feature, we have the most control we're ever going to have over how difficult and expensive that's going to be for us.

This is a philosophy that has to make it all the way back to the start of the decision making process. When considering options, knowing the true cost of ownership is as vital as the revenue opportunity and the launch cost. Remember - you build something once, but you maintain it for life.

Saturday 13 December 2008

Failure Modes

Whenever we're building a product, we've got to keep in mind what might go wrong, rather than just catering mindlessly to the functional spec. That's because specifications are largely written for a parallel universe where everything goes as planned and nothing ever breaks; while we must write software that runs right here in this universe, with all its unpredictability, unintended consequences, and poorly behaved users.

Oh and as I've said in the past, if you have a business owner that actually specs for failure modes, kiss them passionately now and never let them go. But for the rest of us, maybe it would help us keep failure modes in the forefront of our minds as we worked if we came up with some simplified categories to keep track of. How about internal, external, and human?

Internal

I'm not going to say too much about internal failure modes, because they are both the most commonly considered types and they have the most existing solutions out there.

You could sum up internal failures by imagining your code operating autonomously in a closed environment. What might go wrong? You are essentially catering for quality here, and we have all sorts of test environments and unit tests to combat defects we might accidentally introduce through our own artifacts.

External

The key difference between external and internal failure modes is precisely what I said above - you are imagining that your code is operating in perfect isolation. If you are reading this, then I sincerely hope you rolled your eyes at that thought.

Let's assume that integration is part of internal, and we only start talking external forces when our product is out there running online. What might go wrong?

Occasionally I meet teams that are pretty good at detecting and reacting to external failures and it pleases me greatly. Let's consider some examples; what if an external price list that your system refers to goes down? How about if a service intended to validate addresses becomes a black hole? What if you lose your entire internet connection?

Those examples are all about blackouts - total and obvious removal of service - so things are conspicuous by their absence. For bonus points, how are you at spotting brownouts? That's when things are 'up' but still broken in a very critical way, and the results can sometimes cost you far more than a blackout, as they can go undetected for a while...

Easy example - you subscribe to a feed for up-to-the-minute foreign exchange rates. For performance reasons, you probably store the most recent values for each currency you use in a cache or database, and read it from there per transaction. What happens if you stop receiving the feed? You could keep transacting for a very long time before you notice, and you will have either disadvantaged yourself or your customers by using out of date rates - neither of which is desirable.

Perhaps the feed didn't even stop. Perhaps the schema changed, in which case you'd still see a regular drop of data if you were monitoring the consuming interface, but you'd have unusable data - or worse - be inserting the wrong values against each currency.
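One way to catch that kind of silent schema drift is to validate every message before it goes anywhere near the cache. This is only a minimal sketch: the message shape (`currency` and `rate` fields), the `EXPECTED_FIELDS` table and the `validate_rate_message` function are all made up for illustration and do not come from any real feed.

```python
# Hypothetical message shape: {"currency": "EUR", "rate": 1.39}
EXPECTED_FIELDS = {"currency": str, "rate": float}


def validate_rate_message(message, known_currencies):
    """Return (currency, rate), or raise ValueError if the message looks wrong."""
    # A renamed, missing, or extra field is the first sign the schema moved.
    if set(message) != set(EXPECTED_FIELDS):
        raise ValueError(f"unexpected fields: {sorted(message)}")
    # Right field names but the wrong types is just as unusable.
    for field, expected_type in EXPECTED_FIELDS.items():
        if not isinstance(message[field], expected_type):
            raise ValueError(f"{field} is not a {expected_type.__name__}")
    currency, rate = message["currency"], message["rate"]
    # Basic sanity checks so garbage never gets stored against a currency.
    if currency not in known_currencies:
        raise ValueError(f"unknown currency: {currency}")
    if rate <= 0:
        raise ValueError(f"implausible rate for {currency}: {rate}")
    return currency, rate
```

Rejecting the message (and raising an alert) is deliberately stricter than trying to adapt on the fly - a loud failure is the whole point when the alternative is a brownout.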

Human

Human failure modes are the least catered for in our profession, regardless of the fact they're just as inevitable and just as expensive. You could argue that 'human' is just another type of external failure, but I consider it fundamentally different due to one simple word - "oops".

To err is human and all that junk. We do stuff like set parameters incorrectly, turn off the wrong server, pull out the wrong disk, plug in the wrong cable, ignore system requirements etc - all with the best of intentions.

So what would happen if, say, a live application server is misconfigured to use a development database and then you unknowingly unleash real users upon it? You could spend a very long time troubleshooting it, or worse still it might actually work - and thinking about brownouts - how long will it be before you notice? For users who'd attached to that node, where will all their changes be, and how will you merge that back into the 'real' live data?

Humans can also accidentally fail to do things, and that has consequences for our systems too. Consider our feed example - perhaps we just forgot to renew the subscription, and so we're getting stale or no data even though the system has done everything it was designed to do. Hang on, who was in charge of updating those SSL certificates?

Perhaps we don't think about maintenance mistakes up front because whenever we build something, we always picture ourselves performing the operational tasks. And to us, the steps are obvious and we're performing them, in a simplified world in our heads, without any other distractions competing for our attention. Again - not real life.

And so...

All of these things can be monitored, tested for, and caught. In our forex example, you might check the age of the data every time you read the exchange rate value in preparation for a transaction, and fail it if it exceeds a certain threshold (or just watch the age in a separate process).
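The age check could look something like this. It is a sketch under assumptions: the `RateCache` class, the `StaleRateError` exception and the 60 second threshold are invented for illustration, and the right threshold depends entirely on how often your feed is supposed to arrive.

```python
import time

MAX_RATE_AGE_SECONDS = 60.0  # assumed threshold; tune to your feed's cadence


class StaleRateError(Exception):
    """Raised when a cached rate is too old to transact on."""


class RateCache:
    def __init__(self, clock=time.time):
        self._clock = clock  # injectable so the age check can be tested
        self._rates = {}     # currency -> (rate, time the rate was received)

    def update(self, currency, rate):
        """Called by the feed consumer whenever a fresh rate arrives."""
        self._rates[currency] = (rate, self._clock())

    def get(self, currency, max_age=MAX_RATE_AGE_SECONDS):
        """Called per transaction; refuses to hand back an out-of-date rate."""
        rate, received_at = self._rates[currency]
        age = self._clock() - received_at
        if age > max_age:
            raise StaleRateError(
                f"{currency} rate is {age:.0f}s old (limit {max_age:.0f}s)"
            )
        return rate
```

Failing the transaction is the conservative choice here; the separate-process variant would run the same age comparison on a timer and alert instead.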

In our live server with test data example, you might mandate that systems and data sources state what mode they're in (test, live, demo, etc) in their connection string - better yet generate an alert if there is a state mismatch in the stack (or segment your network so communication is not possible).
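As a rough sketch of the mismatch check, assume connection strings in a simple `key=value;key=value` form that carry a `mode` entry. That format, and the `parse_mode` and `find_mode_mismatches` helpers, are hypothetical - swap in whatever your stack really uses.

```python
def parse_mode(connection_string):
    """Extract the declared mode from a 'key=value;key=value' string."""
    pairs = (
        item.split("=", 1) for item in connection_string.split(";") if "=" in item
    )
    settings = {key.strip().lower(): value.strip().lower() for key, value in pairs}
    if "mode" not in settings:
        # An undeclared mode is treated as an error rather than guessed at.
        raise ValueError("connection string does not declare a mode")
    return settings["mode"]


def find_mode_mismatches(app_mode, connection_strings):
    """Return the names of data sources whose mode differs from the app's."""
    return [
        name
        for name, connection_string in connection_strings.items()
        if parse_mode(connection_string) != app_mode
    ]
```

Run at startup, a non-empty result would be the cue to raise an alert or refuse to serve traffic:

```python
sources = {"orders": "host=db1;mode=live", "users": "host=devdb;mode=test"}
print(find_mode_mismatches("live", sources))
```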

The question isn't are there solutions; the question is how far is far enough?

As long as you think about failure modes in whatever way works for you, and make a pragmatic judgement on each risk using likelihood and impact to determine how many monitors and fail-safes it's worth building in, then you'll have done your job significantly better than the vast majority of engineers out there - and your customers will thank you for it with their business.
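If it helps to make that judgement explicit, here is one possible way to rank risks by likelihood and impact. The 1 to 5 scales, the `Risk` class, the `worth_guarding` function and the threshold are all assumptions for the sake of the example, not a standard.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (expected)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self):
        return self.likelihood * self.impact


def worth_guarding(risks, threshold):
    """Return risk names at or above the threshold, highest score first."""
    ranked = sorted(risks, key=lambda risk: risk.score, reverse=True)
    return [risk.name for risk in ranked if risk.score >= threshold]
```

Anything that falls below the threshold is a risk you have consciously decided to live with, which is still better than one you never thought about.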

Tuesday 2 December 2008

Speed is in the Eye of the Beholder

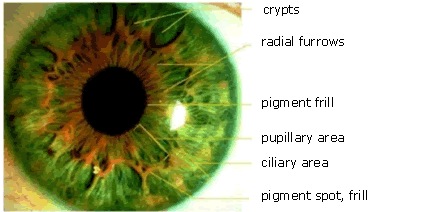

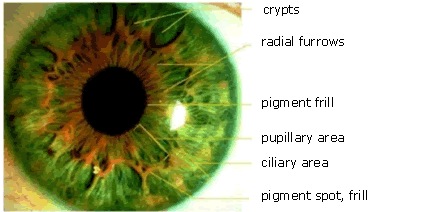

Yesterday I was coming through Heathrow again, and while I was waiting for my luggage to surprise me by actually turning up, I was ruminating on performance, user experience, and the iris scanner (old BBC link). For those who haven't had the pleasure, we're talking about a biometric border security system based on the unique patterns in an individual's eye, implemented at certain UK borders as an optional alternative to traverse passport security in a self-service way.

Flying internationally at least once a month for the last couple of years, I've been a regular user of the iris scanner since its early trials. Like all new technology, it went through a period of unreliability and rapid change in its early days, but it's been pretty good for a while now. Step into booth. Have picture of eyes taken. Enter the UK. Awesome.

The only problem is that...

It...

Is...

Just...

Too...

Slow...

Don't get me wrong - you don't have to queue up for it (yet) and you do spend less time overall at the border - it's just that, for a high tech biometric solution, it seems to take an awfully long time to look me up once it snaps what it considers to be a usable image of my irises. You know, in real time it isn't even that expensive - probably an average of about 5 or 6 seconds - but it's just long enough for you to want to keep moving, hesitate, grumble to yourself, briefly wonder if it's broken, and then the gates open up.

It occurred to me that maybe my expectations as a user were perhaps unfair, but then quick as a flash I realized - hey, I'm the user here, so don't I set the standard?

So that's when I started to think about how the performance of this system could be improved, and caught myself falling straight into rookie trap number 1:

I started making assumptions about the implementation and thinking about what solutions could be put in place. I figured there must be some processing time incurred when the iris image is captured as the machine picks out the patterns in my eyes. That's a local CPU power constraint. Once my iris patterns are converted into a machine-readable representation, there must be some kind of DB lookup to find a match. Once I've been uniquely identified, I imagine there will be some other data sources to be consulted - checking against the various lists we use to keep terrorists and whatnot at bay.

Well this all sounds very read intensive, so it's a good case for having lots of local replicas (the iris machines are spread out over a number of terminals). Each unique 'eye print' is rarely going to come through more often than days or weeks apart, so most forms of request caching won't help us much with that. Of course there is also write load - we've got to keep track of who crossed the border when and what the result was - but we can delay those without much penalty, as it is the reads that keep us standing in that booth. Maybe we could even periodically import our border security lists to a local service if we observe a significant network cost in checking against them for each individual scanned through the gate (assuming they are currently maintained remotely by other agencies).

Ignoring the fact that I'm making all sorts of horrible guesses about how this system currently works, these seem like reasonably sensible patterns to me, so what's the rookie trap?

Simply that I didn't start by understanding the basic problem I am trying to solve, instead rolling up my sleeves and diving straight into the technical complexities. In doing so, I might have overlooked a plain, simple solution and that's usually bad - why solve a problem in a complex, expensive way when a simple, cheap way will do just as well?

In this example, the problem is not that 5 seconds is an unacceptable time to process the image, compare the data against the registered users, and then pass all the additional border security checks - the problem is that 5 seconds feels like a long time when you're standing in a glass box waiting for it all to happen!

So what else might we have done instead of a major re-architecture of the iris back end? How about just lengthening the booth to form slightly more of a corridor, with the scanner at one end and the gates at the other? With this simple trick, it still takes 5 seconds before the gates can open, but it doesn't feel like it - you haven't been standing still waiting, there is a sensation of progress.

Same level of system performance, very different user experience.

General engineering lesson 1 - customer experience is king. Monitoring and other data driven metrics are important, but how it really looks and feels to your users matters way more than whatever you can prove with data. They'll judge you by their own experiences of your system, not by your reports.

General engineering lesson 2 - you don't have to solve every problem. Sometimes it's better/cheaper/faster to neutralize or work around the issue instead. You might not be able to build a different floor layout, but you can do things like transfer data in the background (not locking out the UI) and show progress 'holding' pages during long searches etc.
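To make the background-work idea concrete, here is a small sketch using a worker thread that reports progress while the caller stays free. `slow_search` is a stand-in for any long-running operation and `run_in_background` is a hypothetical helper, not part of any particular UI framework.

```python
import threading
import time


def slow_search(report_progress, steps=5, step_seconds=0.01):
    """Stand-in for a long search; reports percent complete as it goes."""
    for step in range(1, steps + 1):
        time.sleep(step_seconds)  # pretend to do a slice of the work
        report_progress(step * 100 // steps)
    return "results"


def run_in_background(task, on_progress, on_done):
    """Run task(on_progress) on a worker thread so the caller is not blocked."""
    def worker():
        on_done(task(on_progress))

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread
```

The total time taken is unchanged; what changes is that the user sees movement instead of a frozen screen. In a real UI toolkit the callbacks would usually need to be handed back to the UI thread rather than called directly from the worker.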

Oh and just for the record, I really have no idea how the back end of the iris system works - you are supposed to be seeing the analogy here...

Flying internationally at least once a month for the last couple of years, I've been a regular user of the iris scanner since it's early trials. Like all new technology, it went through a period of unreliability and rapid change in its early days, but its been pretty good for a while now. Step into booth. Have picture of eyes taken. Enter the UK. Awesome.

The only problem is that...

It...

Is...

Just...

Too...

Slow...

Don't get me wrong - you don't have to queue up for it (yet) and you do spend less time overall at the border - it's just that, for a high tech biometric solution, it seems to take an awful long time to look me up once it snaps what it considers to be a usable image of my retinas. You know, in real time it isn't even that expensive - probably an average of about 5 or 6 seconds - but it's just long enough for you to want to keep moving, hesitate, grumble to yourself, briefly wonder if it's broken, and then the gates open up.

It occurred to me that maybe my expectations as a user were perhaps unfair, but then quick as a flash I realized - hey, I'm the user here, so don't I set the standard?

So that's when I started to think about how the performance of this system could be improved, and caught myself falling straight into rookie trap number 1:

I started making assumptions about the implementation and thinking about what solutions could be put in place. I figured there must be some processing time incurred when the retinal image is captured as the machine picks out the patterns in my eyes. That's a local CPU power constraint. Once my retinal patterns are converted into a machine-readable representation, there must be some kind of DB lookup to find a match. Once I've been uniquely identified, I imagine there will be some other data sources to be consulted - checking against the various lists we use to keep terrorists and whatnot at bay.

Well this all sounds very read intensive, so it's a good case for having lots of local replicas (the iris machines are spread out over a number of terminals). Each unique 'eye print' is rarely going to come through more often than days or weeks apart, so most forms of request caching won't help us much with that. Of course there is also write load - we've got to keep track of who crossed the border when and what the result was - but we can delay those without much penalty, as it is the reads that keep us standing in that booth. Maybe we could even periodically import our border security lists to a local service if we observe a significant network cost in checking against them for each individual scanned through the gate (assuming they are currently maintained remotely by other agencies).
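To make those guessed-at patterns a bit more concrete, here is a minimal Python sketch of a gate that answers from a local replica and a locally imported watch list, and queues its writes for later. Everything in it (the `BorderGate` class, the dictionaries standing in for data stores, the field names) is invented for illustration and says nothing about how the real system is built.

```python
import queue


class BorderGate:
    """Hypothetical gate: reads hit local copies, writes are deferred."""

    def __init__(self, local_replica, watch_list):
        self.local_replica = local_replica    # eye print -> traveller id (assumed shape)
        self.watch_list = watch_list          # periodically imported local copy
        self.pending_writes = queue.Queue()   # crossings waiting to be recorded
        self.crossing_log = []                # stands in for the central store

    def scan(self, eye_print):
        """Read path only: nothing here waits on a write."""
        traveller = self.local_replica.get(eye_print)
        allowed = traveller is not None and traveller not in self.watch_list
        # Record the crossing later, off the critical path.
        self.pending_writes.put((eye_print, traveller, allowed))
        return allowed

    def flush_writes(self):
        """Drain queued crossings into the log; returns how many were written."""
        flushed = 0
        while True:
            try:
                record = self.pending_writes.get_nowait()
            except queue.Empty:
                return flushed
            self.crossing_log.append(record)
            flushed += 1
```

The point of the split is that `scan` is all the person in the booth ever waits for, while `flush_writes` can run whenever it is convenient.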

Ignoring the fact that I'm making all sorts of horrible guesses about how this system currently works, these seem like reasonably sensible patterns to me, so what's the rookie trap?

Simply that I didn't start by understanding the basic problem I was trying to solve, instead rolling up my sleeves and diving straight into the technical complexities. In doing so, I might have overlooked a plain, simple solution and that's usually bad - why solve a problem in a complex, expensive way when a simple, cheap way will do just as well?

In this example, the problem is not that 5 seconds is an unacceptable time to process the image, compare the data against the registered users, and then pass all the additional border security checks - the problem is that 5 seconds feels like a long time when you're standing in a glass box waiting for it all to happen!

So what else might we have done instead of a major re-architecture of the iris back end? How about just lengthening the booth to form slightly more of a corridor, with the scanner at one end and the gates at the other? With this simple trick, it still takes 5 seconds before the gates can open, but it doesn't feel like it - you haven't been standing still waiting, there is a sensation of progress.

Same level of system performance, very different user experience.

General engineering lesson 1 - customer experience is king. Monitoring and other data driven metrics are important, but how it really looks and feels to your users matters way more than whatever you can prove with data. They'll judge you by their own experiences of your system, not by your reports.

General engineering lesson 2 - you don't have to solve every problem. Sometimes it's better/cheaper/faster to neutralize or work around the issue instead. You might not be able to build a different floor layout, but you can do things like transfer data in the background (not locking out the UI) and show progress 'holding' pages during long searches etc.
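As a rough illustration of the "keep the user informed while the slow thing happens" idea, the Python sketch below runs a long search on a worker thread and calls a progress callback while it waits. The names `slow_search` and `search_with_progress`, and the timings, are made up for the example; a real UI toolkit would have its own way of doing this.

```python
import threading
import time


def slow_search(query, delay=0.2):
    """Stand-in for a long-running lookup (hypothetical)."""
    time.sleep(delay)
    return f"results for {query}"


def search_with_progress(query, on_tick, tick=0.05):
    """Run the search on a worker thread and report progress while waiting."""
    result = {}

    def worker():
        result["value"] = slow_search(query)

    thread = threading.Thread(target=worker)
    thread.start()
    ticks = 0
    while thread.is_alive():
        thread.join(timeout=tick)  # wait briefly, then update the user
        if thread.is_alive():
            ticks += 1
            on_tick(ticks)         # e.g. redraw a spinner or 'holding' page
    return result["value"]
```

The search takes exactly as long as it did before; the only difference is that the caller gets something to show the user in the meantime.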

Oh and just for the record, I really have no idea how the back end of the iris system works - you are supposed to be seeing the analogy here...

Monday 1 December 2008

Change Control

With another December rolling around already, we head into that risky territory that we must navigate once a year - seasonal trading for many companies is picking up, yet now is the time when most support staff are trying as hard as possible to be on holiday. A tricky predicament - and what better time to talk about change control?

A lot of people - particularly fellow agilists - regard change control as a pointless, work-creationist, bureaucratic impediment to doing actual work. If it's irresponsibly applied, then I'd have to agree with them, but there are ways to implement change control that will add value to what you do without progress grinding to a halt amid kilometers of red tape.

Firstly, let's talk about why we'd bother in the first place. What's in it for us, and what's in it for the organization, to have some form of change control in place? Talking about it from this perspective (i.e. what we want to get out of it) means that whatever you do for change control is much more likely to deliver the benefits - because you have a goal in mind.

Here's what I look for in a change control process:

• The discipline of documenting a plan, even in rough steps, forces people to think through what they're doing and can uncover gotchas before they bite.

• Making the proposed change visible to other teams exposes any dependencies and technical/resource conflicts with parallel work.

• Making the proposed changes visible to the business makes sure the true impact to customers is taken into consideration and appropriate communication planned.

• Keeping simple records (such as plan vs actual steps taken) can contribute significantly to knowledge bases about the system and how to own it.

• Capturing basic information about the proposed change and circulating it to stakeholders makes sure balanced risk assessments are made when we need to decide when and how to implement something, and how much to spend on mitigations.

Ultimately, this all adds up to confidence in the activities the team are undertaking, and over time, will lead to fewer late nights and less reactive work.

And here are my rules of thumb for how change control should be implemented:

• Never let any process get in the way of doing the bloody obvious. If someone's on fire, you don't go and get the first aid manual and look up 'F' for fire.

• Change control can be granular, with stricter controls on more critical elements (like settlements), and a more flexible approach on lower impact or easier to restore elements (like content and feeds).

• Don't just take a process off the shelf or copy another organization's verbatim - this is the kind of thing that got change control the reputation it has - think about what you need and do something appropriate.

• Start small and grow up - it's easy to add more diligence where it proves necessary, but much more difficult to relax controls on areas where progress is pointlessly restricted.

So what do you actually do? As I said above, start off lightweight and cheap - a spreadsheet should do it; there isn't always the need for a huge workflow management database. Make a simple template and make sure you circulate it the way information is best disseminated in your organization (email, intranet, pinned on the wall - whatever gets it seen). Borrow ideas from your industry peers, but keep in mind the outcomes that best serve your circumstances. Most of all, identify the right stakeholders for each area of the system, appreciate the different requirements the applications under your stewardship have, and get into the habit of weighing risk and thinking before you act.
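If it helps to picture the spreadsheet, here is one possible starting point sketched in Python: a handful of columns written out as CSV, plus a tiny lookup that asks for more sign-off on critical areas than on easily restored ones. The column names, the areas and the approval counts are all assumptions for the sake of the example, so swap in whatever suits your own systems.

```python
import csv
import io

# Hypothetical column set; adjust to whatever your organization needs.
FIELDS = ["id", "summary", "area", "plan", "rollback",
          "stakeholders", "risk", "actual_steps"]

# Hypothetical tiers: stricter sign-off for more critical areas.
APPROVALS_BY_AREA = {"settlements": 2, "content": 0, "feeds": 0}


def approvals_required(area):
    """Unclassified areas default to one approval until someone decides otherwise."""
    return APPROVALS_BY_AREA.get(area, 1)


def write_changes(changes, out):
    """Write change records as CSV rows, filling blanks for missing fields."""
    writer = csv.DictWriter(out, fieldnames=FIELDS + ["approvals_required"])
    writer.writeheader()
    for change in changes:
        row = {field: change.get(field, "") for field in FIELDS}
        row["approvals_required"] = approvals_required(change.get("area", ""))
        writer.writerow(row)
```

Calling `write_changes(records, open_file)` with a list of dictionaries produces a file any spreadsheet program can open, which is about as much tooling as you need to get started.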

Here's to peace of mind - let's spend December at Christmas parties, not postmortems!

