It's nearly Christmas (again!) and I hope you're way too busy enjoying the best this season has to offer to be reading this! With any luck, the systems and people you look after will all be humming along just fine, leaving you able to spend plenty of time with family and friends.

At times like this, it can be easy to forget that there are a lot of people out there who don't have it as sweet as we do. Where running water is a luxury, we'd find it difficult getting sympathy for our lengthy outstanding bugs list or early morning release woes!

I'm no Bono, but I like to contribute somehow whenever I come across a cause I believe in, and doing something specifically at this time of year has the extra bonus of reminding you exactly how lucky you are during what can be a time of excess.

Now, this blog has always been about the free exchange of ideas - a place where I can capture my original thoughts and experiences from the work I do, in the hope that it will help others with the work that they do. Whatever else it is, it's never been a money making exercise - and that's why I've never before tried to monetize the content, despite a growing readership (hello to both of you).

However, as of today, keener-eyed observers will notice a Google Ads box lurking surreptitiously in the right hand panel, ready to corrupt my benevolent posts with its raw, unbridled marketing vivacity. But don't worry - I haven't sold out yet! Here is the plan...

I'll run Google AdSense for the coming year, and around this time next year, donate all the revenue to charity. Every cent, and a moderate top up from myself.

It is impractical to pick a charity 12 months ahead, but the money will be donated via Global Giving or Just Giving (or maybe both) so that you can keep me honest. I'm thinking about supporting something which helps to establish education in 3rd world countries - that appeals to my "help those who are prepared to help themselves" philosophy - but will take suggestions from the community.

As well as cheques being posted next December, you can expect a running total, say, quarterly - and heck, if things go really well maybe we'll have a mid-year checkpoint and make a donation then too.

So, Merry Christmas and all the best for 2009, and remember, if you see something that interests you on the right hand side then give it a click - it's for a good cause!

See you next year

Eachan

The things I should have learned from years of putting together people and technology to get successful product online. We’ll probably talk about strategy, distributed systems, agile development, webscale computing and of course how to manage those most complicated of all machines – the human being – in our quest to expose the most business value in the least expensive way.

Monday 22 December 2008

Sunday 21 December 2008

When is the right time to launch?

Ignoring commercial concerns such as marketing campaigns, complementary events, and market conditions, this essentially comes down to weighing the quality/completeness of the product against getting to market sooner.

The quality over time to market side has been advocated a few times in recent episodes of Paul Boag's podcast, so I figured I would speak up for the other side. But before I do, let me just say that I don't think there is a right answer to this and, as Paul also conceded, it depends on whether your application's success requires a land grab or not.

I am, by nature, an "early and often" man - and that's kind of a vote for time to market over quality. I say kind of because I think it would be more accurate to say that it's a vote for time to market over perfection.

For me, the "often" part is inextricably linked to the "early" part. If you can show a pattern of frequent, regular improvement and feature releases, then you can afford to ship with less on day one. Users can often be more forgiving when they see things turned around quickly, and new things regularly appearing can even become a reason in itself to return to the site more often.

Quality is still a factor, but it isn't as black and white as I've been hearing it presented. In the early days of a new product, I think where you spend your time is more important than overall quality. You should be very confident in anything that deals with accounts, real money, or the custody of users' data before shipping. I would argue that getting those areas right for launch at the expense of other parts of the system is better than a more even systemwide standard of quality. You always have limited resources. Spend the most where it counts the most.

And finally, openness. Be truthful and transparent with your users. Start a blog about development progress and the issues you've run into. Provide feedback mechanisms, and actually get back to people who've taken the time to share their thoughts - with something material too, not just an automated thank-you. Send out proactive notifications ahead of impactful changes and after unplanned events. Stick 'beta' labels on things you're shipping early - it'll keep users' blood pressure down, and you might be surprised by how much of the community is prepared to help.

I am aware that I haven't actually answered the question that lends this post its title. I don't know if there even is an off-the-shelf answer, but I hope that I've at least given you some more ideas on how to make the right decision for yourself.

Friday 19 December 2008

Constraints and Creativity

Does the greatest creativity come from unlimited choice; a totally blank canvas with every possible option and no restrictions, or from difficult circumstances; where pressure is high, the problems are acute and all the easy avenues are closed?

History has proven necessity to be the mother of invention time and time again, and I've personally seen a lot of otherwise-excellent people become paralyzed when faced with too much opportunity.

The current economic circumstances are bringing this into somewhat sharp relief. From time to time I get approached by other entrepreneurs with an idea they want to develop - usually only a couple of times a year, but in the last couple of quarters I've seen half a dozen. There is something about this credit crunch...

Conditions are right for the sorts of things I've seen; internet based, self-service, person to person applications that look for efficiency by cutting out middlemen and using smarter fulfillment. They're all great ideas and I really wish I had the time to help them all out.

Returning to the point, would you get this kind of thinking in times of plenty? Who knows, maybe something good will come out of this credit crunch after all.

Wednesday 17 December 2008

Tradeoffs - You're Doing It Wrong

Our time is always our scarcest resource, and most of the web companies I know are perpetually bristling with more ideas than they could ever manage to ship. We always wish we could do them all, but at the same time we know deep down that it isn't realistic. Under these circumstances, tradeoffs are an inevitable and perfectly normal part of life.

The secret to doing this well is in what things you weigh against each other when deciding how to spend the resources you have.

It's so easy to start down the slippery slope of trading features off against the underlying technologies that support them. Fall into this trap and you'll get a small surge of customer-facing things out quickly, but stay with the pattern (easy to do - as it does reward you well in the short term) and you'll soon find yourself paralyzed by technical debt; struggling against continuously increasing time to market and building on top of a shaky, unreliable platform.

Instead, try trading off a feature and its supporting technology against another feature and its supporting technology. This isn't a carte blanche ticket for building hopelessly over-provisioned systems - it's simply about setting yourself up in the most appropriate way for future requirements and scalability, rather than forgoing whatever platform work would have been diligent and starting off behind the curve.

Trading features off against their underlying technology is not just short sighted, it's also unrealistic. You see, the fact is that all customer-facing features have to be maintained and supported anyway, no matter how badly we wish we could spend the time on other things. And right up front, when we first build each feature, we have the most control we're ever going to have over how difficult and expensive that's going to be for us.

This is a philosophy that has to make it all the way back to the start of the decision making process. When considering options, knowing the true cost of ownership is as vital as the revenue opportunity and the launch cost. Remember - you build something once, but you maintain it for life.

Saturday 13 December 2008

Failure Modes

Whenever we're building a product, we've got to keep in mind what might go wrong, rather than just catering mindlessly to the functional spec. That's because specifications are largely written for a parallel universe where everything goes as planned and nothing ever breaks, while we must write software that runs right here in this universe, with all its unpredictability, unintended consequences, and poorly behaved users.

Oh and as I've said in the past, if you have a business owner that actually specs for failure modes, kiss them passionately now and never let them go. But for the rest of us, maybe it would help us keep failure modes in the forefront of our minds as we worked if we came up with some simplified categories to keep track of. How about internal, external, and human?

Internal

I'm not going to say too much about internal failure modes, because they are both the most commonly considered type and the one with the most existing solutions out there.

You could sum up internal failures by imagining your code operating autonomously in a closed environment. What might go wrong? You are essentially catering for quality here, and we have all sorts of test environments and unit tests to combat defects we might accidentally introduce through our own artifacts.

External

The key difference between external and internal failure modes is precisely what I said above - you are imagining that your code is operating in perfect isolation. If you are reading this, then I sincerely hope you rolled your eyes at that thought.

Let's assume that integration is part of internal, and we only start talking external forces when our product is out there running online. What might go wrong?

Occasionally I meet teams that are pretty good at detecting and reacting to external failures and it pleases me greatly. Let's consider some examples: what if an external price list that your system refers to goes down? How about if a service intended to validate addresses becomes a black hole? What if you lose your entire internet connection?

Those examples are all about blackouts - total and obvious removal of service - so things are conspicuous by their absence. For bonus points, how are you at spotting brownouts? That's when things are 'up' but still broken in a very critical way, and the results can sometimes cost you far more than a blackout, as they can go undetected for a while...

Easy example - you subscribe to a feed for up-to-the-minute foreign exchange rates. For performance reasons, you probably store the most recent values for each currency you use in a cache or database, and read it from there per transaction. What happens if you stop receiving the feed? You could keep transacting for a very long time before you notice, and you will have either disadvantaged yourself or your customers by using out of date rates - neither of which is desirable.

Perhaps the feed didn't even stop. Perhaps the schema changed, in which case you'd still see a regular drop of data if you were monitoring the consuming interface, but you'd have unusable data - or worse - be inserting the wrong values against each currency.
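One way to catch the schema-change case is to validate every record before it gets anywhere near the cache. This is only a sketch under assumptions: the field names `currency` and `rate`, the set of expected currencies, and the `FeedFormatError` type are all hypothetical, since I'm not describing any particular feed here.

```python
from decimal import Decimal, InvalidOperation

# Hypothetical: the currencies this system actually trades in.
EXPECTED_CURRENCIES = {"USD", "EUR", "GBP"}


class FeedFormatError(ValueError):
    """Raised when a feed record does not look the way we expect."""


def parse_rate_record(record):
    """Validate one feed record and return (currency, rate).

    The 'currency' and 'rate' field names are assumptions for illustration.
    """
    try:
        currency = record["currency"]
        rate = Decimal(str(record["rate"]))
    except (KeyError, TypeError, InvalidOperation) as exc:
        # Missing field, wrong container type, or a non-numeric rate.
        raise FeedFormatError(f"malformed record: {record!r}") from exc
    if currency not in EXPECTED_CURRENCIES:
        raise FeedFormatError(f"unexpected currency: {currency!r}")
    if not rate.is_finite() or rate <= 0:
        raise FeedFormatError(f"implausible rate for {currency}: {rate}")
    return currency, rate
```

Rejecting a bad record loudly turns a silent brownout into something you can alert on, which is the whole point.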

Human

Human failure modes are the least catered for in our profession, regardless of the fact they're just as inevitable and just as expensive. You could argue that 'human' is just another type of external failure, but I consider it fundamentally different due to one simple word - "oops".

To err is human and all that junk. We do stuff like set parameters incorrectly, turn off the wrong server, pull out the wrong disk, plug in the wrong cable, ignore system requirements etc - all with the best of intentions.

So what would happen if, say, a live application server is misconfigured to use a development database and then you unknowingly unleash real users upon it? You could spend a very long time troubleshooting it, or worse still it might actually work - and thinking about brownouts - how long will it be before you notice? For users who'd attached to that node, where will all their changes be, and how will you merge that back into the 'real' live data?

Humans can also accidentally not do things, and that has consequences for our system too. Consider our feed example - perhaps we just forgot to renew the subscription, and so we're getting stale or no data even though the system has done everything it was designed to do. Hang on, who was in charge of updating those SSL certificates?

Perhaps we don't think about maintenance mistakes up front because whenever we build something, we always picture ourselves performing the operational tasks. And to us, the steps are obvious and we're performing them, in a simplified world in our heads, without any other distractions competing for our attention. Again - not real life.

And so...

All of these things can be monitored, tested for, and caught. In our forex example, you might check the age of the data every time you read the exchange rate value in preparation for a transaction, and fail it if it exceeds a certain threshold (or just watch the age in a separate process).
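The age check could look something like this. It's a minimal sketch, not a definitive implementation: `RateCache`, `StaleRateError`, and the 120 second threshold are all names and numbers I've made up for illustration.

```python
import time

MAX_RATE_AGE_SECONDS = 120  # hypothetical threshold; tune it per feed


class StaleRateError(RuntimeError):
    """Raised when the cached rate is too old to transact on."""


class RateCache:
    """Keeps the latest rate per currency along with when it arrived."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so the age logic can be tested
        self._rates = {}     # currency -> (rate, received_at)

    def update(self, currency, rate):
        """Record a fresh rate from the feed."""
        self._rates[currency] = (rate, self._clock())

    def get_fresh(self, currency, max_age=MAX_RATE_AGE_SECONDS):
        """Return the rate, or fail the transaction if it is too old."""
        rate, received_at = self._rates[currency]
        age = self._clock() - received_at
        if age > max_age:
            raise StaleRateError(
                f"{currency} rate is {age:.0f}s old (limit {max_age}s)"
            )
        return rate
```

The transaction path calls `get_fresh` instead of reading the value directly, so a feed that quietly stops becomes a visible failure within one threshold period.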

In our live server with test data example, you might mandate that systems and data sources state what mode they're in (test, live, demo, etc) in their connection string - better yet generate an alert if there is a state mismatch in the stack (or segment your network so communication is not possible).
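As a rough sketch of the mode check, assume a simple `key=value;key=value` connection string that carries a `mode` entry. That format, the `mode` key, and the function names are assumptions of mine rather than any real driver's convention.

```python
class ModeMismatchError(RuntimeError):
    """Raised when an application and its data source disagree on mode."""


def parse_connection_string(conn_str):
    """Parse 'key=value;key=value' into a dict with lower-cased keys."""
    pairs = (part.split("=", 1) for part in conn_str.split(";") if "=" in part)
    return {key.strip().lower(): value.strip() for key, value in pairs}


def check_mode(app_mode, conn_str):
    """Refuse to start if the data source is not in the same mode as the app."""
    declared = parse_connection_string(conn_str).get("mode")
    if declared is None:
        raise ModeMismatchError("data source does not declare a mode")
    if declared.lower() != app_mode.lower():
        raise ModeMismatchError(
            f"application is '{app_mode}' but data source is '{declared}'"
        )
```

Run at startup, a call like `check_mode("live", "host=devdb;mode=test")` stops the node before any real user can attach to it.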

The question isn't are there solutions; the question is how far is far enough?

As long as you think about failure modes in whatever way works for you, and make a pragmatic judgement on each risk using likelihood and impact to determine how many monitors and fail-safes it's worth building in, then you'll have done your job significantly better than the vast majority of engineers out there - and your customers will thank you for it with their business.
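If it helps to make the likelihood and impact judgement concrete, a simple score is often enough to rank where the monitors and fail-safes should go first. The 1 to 5 scales and the `Risk` type below are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), a hypothetical scale
    impact: int      # 1 (minor) to 5 (severe), a hypothetical scale

    @property
    def score(self):
        """Simple likelihood x impact product."""
        return self.likelihood * self.impact


def prioritise(risks):
    """Return the risks ordered from highest score to lowest."""
    return sorted(risks, key=lambda risk: risk.score, reverse=True)
```

The numbers you plug in are still a judgement call, but writing them down makes it easier to argue about how far is far enough.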

Tuesday 2 December 2008

Speed is in the Eye of the Beholder

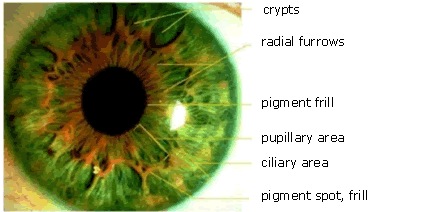

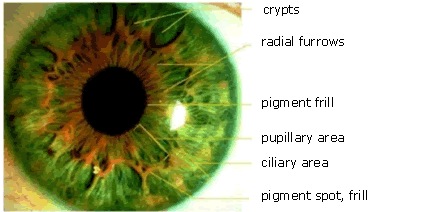

Yesterday I was coming through Heathrow again, and while I was waiting for my luggage to surprise me by actually turning up, I was ruminating on performance, user experience, and the iris scanner (old BBC link). For those who haven't had the pleasure, we're talking about a biometric border security system based on the unique patterns in an individual's eye, implemented at certain UK borders as an optional alternative to traverse passport security in a self-service way.

Flying internationally at least once a month for the last couple of years, I've been a regular user of the iris scanner since its early trials. Like all new technology, it went through a period of unreliability and rapid change in its early days, but it's been pretty good for a while now. Step into booth. Have picture of eyes taken. Enter the UK. Awesome.

The only problem is that...

It...

Is...

Just...

Too...

Slow...

Don't get me wrong - you don't have to queue up for it (yet) and you do spend less time overall at the border - it's just that, for a high tech biometric solution, it seems to take an awfully long time to look me up once it snaps what it considers to be a usable image of my irises. You know, in real time it isn't even that expensive - probably an average of about 5 or 6 seconds - but it's just long enough for you to want to keep moving, hesitate, grumble to yourself, briefly wonder if it's broken, and then the gates open up.

It occurred to me that maybe my expectations as a user were perhaps unfair, but then quick as a flash I realized - hey, I'm the user here, so don't I set the standard?

So that's when I started to think about how the performance of this system could be improved, and caught myself falling straight into rookie trap number 1:

I started making assumptions about the implementation and thinking about what solutions could be put in place. I figured there must be some processing time incurred when the iris image is captured as the machine picks out the patterns in my eyes. That's a local CPU power constraint. Once my iris patterns are converted into a machine-readable representation, there must be some kind of DB lookup to find a match. Once I've been uniquely identified, I imagine there will be some other data sources to be consulted - checking against the various lists we use to keep terrorists and whatnot at bay.

Well this all sounds very read intensive, so it's a good case for having lots of local replicas (the iris machines are spread out over a number of terminals). Each unique 'eye print' is rarely going to come through more often than days or weeks apart, so most forms of request caching won't help us much with that. Of course there is also write load - we've got to keep track of who crossed the border when and what the result was - but we can delay those without much penalty, as it is the reads that keep us standing in that booth. Maybe we could even periodically import our border security lists to a local service if we observe a significant network cost in checking against them for each individual scanned through the gate (assuming they are currently maintained remotely by other agencies).

Ignoring the fact that I'm making all sorts of horrible guesses about how this system currently works, these seem like reasonably sensible patterns to me, so what's the rookie trap?

Simply that I didn't start by understanding the basic problem I was trying to solve, instead rolling up my sleeves and diving straight into the technical complexities. In doing so, I might have overlooked a plain, simple solution and that's usually bad - why solve a problem in a complex, expensive way when a simple, cheap way will do just as well?

In this example, the problem is not that 5 seconds is an unacceptable time to process the image, compare the data against the registered users, and then pass all the additional border security checks - the problem is that 5 seconds feels like a long time when you're standing in a glass box waiting for it all to happen!

So what else might we have done instead of a major re-architecture of the iris back end? How about just lengthening the booth to form slightly more of a corridor, with the scanner at one end and the gates at the other? With this simple trick, it still takes 5 seconds before the gates can open, but it doesn't feel like it - you haven't been standing still waiting, there is a sensation of progress.

Same level of system performance, very different user experience.

General engineering lesson 1 - customer experience is king. Monitoring and other data driven metrics are important, but how it really looks and feels to your users matters way more than whatever you can prove with data. They'll judge you by their own experiences of your system, not by your reports.

General engineering lesson 2 - you don't have to solve every problem. Sometimes it's better/cheaper/faster to neutralize or work around the issue instead. You might not be able to build a different floor layout, but you can do things like transfer data in the background (not locking out the UI) and show progress 'holding' pages during long searches etc.
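To illustrate the background-transfer idea, here is a minimal sketch using a worker thread that reports progress while the caller stays free to keep the UI responsive. The function names and the fake `slow_search` task are hypothetical stand-ins for whatever long-running operation you have.

```python
import threading
import time


def run_in_background(task, on_progress, on_done):
    """Run task(on_progress) on a worker thread, then hand its result to on_done."""

    def worker():
        result = task(on_progress)
        on_done(result)

    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    return thread


def slow_search(report_progress, steps=5, delay=0.01):
    """Stand-in for a long search; reports the fraction completed after each step."""
    for step in range(1, steps + 1):
        time.sleep(delay)  # pretend to do some work
        report_progress(step / steps)
    return "search results"
```

The search takes exactly as long as it did before, but the user sees movement the whole time, which is the software version of lengthening the booth.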

Oh and just for the record, I really have no idea how the back end of the iris system works - you are supposed to be seeing the analogy here...


Same level of system performance, very different user experience.

General engineering lesson 1 - customer experience is king. Monitoring and other data driven metrics are important, but how it really looks and feels to your users matters way more than whatever you can prove with data. They'll judge you by their own experiences of your system, not by your reports.

General engineering lesson 2 - you don't have to solve every problem. Sometimes it's better/cheaper/faster to neutralize or work around the issue instead. You might not be able to build a different floor layout, but you can do things like transfer data in the background (not locking out the UI) and show progress 'holding' pages during long searches etc.
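To make the 'work in the background and show progress' idea a bit more concrete, here is a minimal Python sketch. It is only an illustration, not how any real system does it: `slow_lookup` is a made-up stand-in for a slow back-end call, and `run_with_progress` is a hypothetical helper that runs it on a worker thread while the caller keeps getting a chance to show some sign of life.

```python
import threading
import time


def slow_lookup(query, delay_seconds=0.3):
    """Hypothetical stand-in for a slow back-end call: sleeps, then answers."""
    time.sleep(delay_seconds)
    return f"result for {query}"


def run_with_progress(task, *args, tick_seconds=0.05, on_tick=None):
    """Run task(*args) on a worker thread, calling on_tick(n) while we wait.

    Returns (result, number_of_ticks). The total wait is no shorter; the
    caller just gets regular chances to show that something is happening.
    """
    outcome = {}

    def worker():
        try:
            outcome["value"] = task(*args)
        except Exception as error:  # hand the failure back to the caller
            outcome["error"] = error

    thread = threading.Thread(target=worker)
    thread.start()

    ticks = 0
    while thread.is_alive():
        thread.join(timeout=tick_seconds)  # wait briefly, then check again
        if thread.is_alive():
            ticks += 1
            if on_tick is not None:
                on_tick(ticks)

    if "error" in outcome:
        raise outcome["error"]
    return outcome["value"], ticks


if __name__ == "__main__":
    result, ticks = run_with_progress(
        slow_lookup,
        "traveller 42",
        on_tick=lambda n: print("still working" + "." * n),
    )
    print(result)
```

The lookup takes exactly as long as it did before; the only thing that changes is what the person waiting gets to see, which is the whole point of the corridor trick.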

Oh and just for the record, I really have no idea how the back end of the iris system works - you are supposed to be seeing the analogy here...

Monday 1 December 2008

Change Control

With another December rolling around already, we head into that risky territory that we must navigate once a year - seasonal trading for many companies is picking up, yet now is the time when most support staff are trying as hard as possible to be on holiday. A tricky predicament - and what better time to talk about change control?

A lot of people - particularly fellow agilists - regard change control as a pointless, work-creationist, bureaucratic impediment to doing actual work. If it's irresponsibly applied, then I'd have to agree with them, but there are ways to implement change control that will add value to what you do without progress grinding to a halt amid kilometers of red tape.

Firstly, let's talk about why we'd bother in the first place. What's in it for us, and what's in it for the organization, to have some form of change control in place? Talking about it from this perspective (i.e. what we want to get out of it) means that whatever you do for change control is much more likely to deliver the benefits - because you have a goal in mind.

Here's what I look for in a change control process:

• The discipline of documenting a plan, even in rough steps, forces people to think through what they're doing and can uncover gotchas before they bite.

• Making the proposed change visible to other teams exposes any dependencies and technical/resource conflicts with parallel work.

• Making the proposed changes visible to the business makes sure the true impact to customers is taken into consideration and appropriate communication planned.

• Keeping simple records (such as plan vs actual steps taken) can contribute significantly to knowledge bases about the system and how to own it.

• Capturing basic information about the proposed change and circulating it to stakeholders makes sure balanced risk assessments are made when we need to decide when and how to implement something, and how much to spend on mitigations.

Ultimately, this all adds up to confidence in the activities the team are undertaking, and over time, will lead to fewer late nights and less reactive work.

And here are my rules of thumb for how change control should be implemented:

• Never let any process get in the way of doing the bloody obvious. If someone's on fire, you don't go and get the first aid manual and look up 'F' for fire.

• Change control can be granular, with stricter controls on more critical elements (like settlements), and a more flexible approach on lower impact or easier to restore elements (like content and feeds).

• Don't just take a process off the shelf or copy another organization's verbatim - this is the kind of thing that got change control the reputation it has - think about what you need and do something appropriate.

• Start small and grow up - it's easy to add more diligence where it proves necessary, but much more difficult to relax controls on areas where progress is pointlessly restricted.

So what do you actually do? As I said above, start off lightweight and cheap - a spreadsheet should do it; there isn't always the need for a huge workflow management database. Make a simple template and make sure you circulate it the way information is best disseminated in your organization (email, intranet, pinned on the wall - whatever gets it seen). Borrow ideas from your industry peers, but keep in mind the outcomes that best serve your circumstances. Most of all, identify the right stakeholders for each area of the system, appreciate the different requirements the applications under your stewardship have, and get into the habit of weighing risk and thinking before you act.
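If you'd like a starting point for that simple template, here is a rough Python sketch that writes change records out as CSV, which any spreadsheet can open. Everything in it is an assumption made for illustration: the field names, the three risk levels, and the rule that only high risk changes need sign-off are just one way to express 'stricter on critical elements, lighter elsewhere'. Adjust it to whatever your organization actually needs.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields


@dataclass
class ChangeRecord:
    """One row in a lightweight change log (all field names are illustrative)."""
    summary: str
    system_area: str        # e.g. "settlements" or "content"
    risk: str               # assumed levels: "low", "medium" or "high"
    stakeholders: str       # who needs to see this before it happens
    planned_steps: str
    rollback_plan: str
    actual_steps: str = ""  # filled in afterwards, for plan vs actual


def needs_sign_off(record):
    """Assumed rule: only high risk changes wait for explicit sign-off."""
    return record.risk == "high"


def to_csv(records):
    """Render the records as CSV text, header row first."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(ChangeRecord)]
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


if __name__ == "__main__":
    changes = [
        ChangeRecord(
            summary="Rotate settlement batch credentials",
            system_area="settlements",
            risk="high",
            stakeholders="finance; operations",
            planned_steps="1. pause batch 2. rotate 3. resume",
            rollback_plan="restore previous credentials",
        ),
        ChangeRecord(
            summary="Update homepage banner",
            system_area="content",
            risk="low",
            stakeholders="marketing",
            planned_steps="1. publish",
            rollback_plan="republish previous banner",
        ),
    ]
    print(to_csv(changes))
    print([needs_sign_off(change) for change in changes])
```

The `actual_steps` column starts empty on purpose: filling it in after the change is what gives you the plan vs actual record mentioned earlier.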

Here's to peace of mind - let's spend December at Christmas parties, not postmortems!

Friday 21 November 2008

Root Cause Analysis

To help me kill some time at an airport (which seems to be my second job these days), let me reach into my wardrobe of soap-box issues and pick something out. Ah, root cause analysis, here we go.

In my opinion, proper root cause analysis is the most important part of any operational support process.

Having a professional, predictable response and the skills to restore service quickly are critical - but you have to ensure that your support processes don't stop there. If they do, then you're simply doomed to let history repeat itself, and this means more downtime, more reactive firefighting, and less satisfied customers.

Good root cause analysis takes into account the entire event - systemwide conditions, the team's response, the available data, the policies applied - not just the technical issue which triggered the fault, and looks for ways to reduce the likelihood of recurrence.

Doing root cause analysis properly can be expensive, because it isn't enough to get to the bottom of why it happened this time - it's why it keeps happening, and why the system was susceptible to the issue in the first place, that you need to uncover to really add future value. Think of the time spent on it as an investment in availability, one that frees up your team to work more strategically (as well as enjoy their jobs more) and makes for happier users (which oddly seems to make happier engineers).

But what you learn by doing this isn't really worth the time you spend on it without the organizational discipline to follow up with real changes. If you're truly tracing issues back to their root, you'd be surprised how many are the result of a chain of events that could stretch right back to the earliest phases in projects. This needs commitment.

If you make money out of responding to problems then you'll probably want to ignore my advice. There is a whole industry of IT suppliers whose core business lives here, and while it's an admirable pursuit, don't take the habit with you when you join an internal team!

Wednesday 19 November 2008

The Confidence-o-Meter

A while back we had a project that just seemed destined to face every type of adversity right from the outset (we've all had at least one). Being a new line of business for us, we didn't even have a customer team when work needed to begin! It was going downhill, and with strict regulatory deadlines to meet, we needed to get it back on track. Additional complications arose because the team was new, and as such were still gelling together as they tackled the work. Let's throw in an unusually high dosage of the usual incomplete specifications, changing requirements, and unclear ownership that regularly plague software projects and you have a recipe for a pretty epic train smash.

They say necessity is the mother of invention (Plato?) and there was certainly no shortage of necessity here, so we got on with some serious invention.

The Problem

We needed a way to bring the issues out in the open, in a way that a newly forming team could take ownership of them without accusation and defensiveness creeping in. More urgently still, we had conflicting views on the status of each of the workstreams.

It seemed sensible to start by simply getting in the same room and getting on the same page. To give the time some basic structure, we borrowed some concepts from the planning poker process. There were some basic ideas that made sense for us - getting the team together around a problem, gathering opinion in a way that prevents strong personalities dominating the outcome, and using outliers to zero in on hidden information. As an added bonus, the quasi-familiarity of the process gave the team a sense of purpose and went some way to dispel hostility in a high pressure environment.

The Solution

We started by scheduling a weekly session and sticking to it. Sounds simple, but when the world is coming down around your ears, it is too easy to get caught up in all the reactivity and not make the space to think through what you're doing.

We set aside some time at the end of each week, and our format for the session was fairly simple:

• All the members of the delivery team stated their level of confidence that the project would hit its deadline by calling out a number between 1 and 10, 1 being definitely not, 10 being definitely so.

• We recorded the numbers, then gave the people with the lowest and highest numbers an opportunity to speak, uncovering what they knew that made them so much more or less confident than the rest of us. This way the whole group learned about (or dispelled) issues and opportunities they may have been unaware of.

• In light of the new information from the outliers, everyone had an opportunity to revise their confidence estimate. This was recorded in a spreadsheet which lends this post its title.

• Finally we took some time to talk over the most painful issues or obvious opportunities in the project and the team, then picked the most critical one and committed to changing it over the coming week. We also reviewed what we had promised ourselves we'd change the week before.

Through this discipline the team made, in real time, a bunch of very positive changes to themselves and how they work together without having to stop working and reorganize. We also had a very important index that we could use to gauge exactly how worried we should be - which is pretty important from a risk mitigation perspective. The trend was important too - we were able to observe confidence rise over time, and respond rapidly to the dips which indicated that the team had encountered something that they were worried would prevent them from delivering.
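For anyone who would rather script it than keep a spreadsheet, the session boils down to a few lines of Python. Treat this as a sketch under my own assumptions: the names and scores are invented, the post's spreadsheet did not necessarily use a mean as the weekly index, and `outliers`, `weekly_index` and `dips` are hypothetical helper names.

```python
from statistics import mean


def outliers(scores):
    """Return the (name, score) pairs for the lowest and highest scores.

    These are the two people who get to speak first.
    """
    lowest = min(scores.items(), key=lambda item: item[1])
    highest = max(scores.items(), key=lambda item: item[1])
    return lowest, highest


def weekly_index(revised_scores):
    """Assumed index for the week: the mean of the revised scores."""
    return round(mean(revised_scores.values()), 1)


def dips(history):
    """Positions of weeks where the index fell compared with the week before."""
    return [i for i in range(1, len(history)) if history[i] < history[i - 1]]


if __name__ == "__main__":
    first_call = {"Ana": 3, "Ben": 6, "Cal": 9}  # invented example scores
    print(outliers(first_call))                   # who explains their number

    revised = {"Ana": 5, "Ben": 6, "Cal": 7}      # after hearing the outliers
    print(weekly_index(revised))

    history = [4.0, 5.5, 5.0, 6.5]                # one index per week
    print(dips(history))                          # weeks that deserve a closer look
```

The numbers themselves matter less than the conversation the outliers start, but keeping the weekly index around is what lets you see the trend and react to the dips.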

The Outcome

This exercise gained us a much more stable view of a chaotic process, and let us start picking off improvements as we worked. By making the time to do this and being transparent with the discussions and decisions, the team felt more confident and in control of their work - which always helps morale.

Because we were able to give the business a coherent, accurate assessment of where we were at, we were able to confidently ask for the right support - it was easy to show where the rest of the organization could help, and demonstrate the impact if they didn't meet their obligations to us.

In summary, we got our issues out in the open and got our project back on the rails. And by the time we got together for our post-implementation retrospective, we were pleasantly surprised by how many of our most critical problems we'd already identified and fixed. If you're in a tough spot with a significant piece of work and a fixed deadline, consider giving something like this a try - I think it will work alongside any development methodology.

Monday 17 November 2008

Cloud Computing Isn't...

Thought for the day - when does a new idea gain enough structure to graduate from meme to tangible concept? Is there some quorum of 'experts' that need to agree on its shape? Perhaps we need a minimum number of books written on the topic, or a certain number of vendors packaging the idea up for sale? Or maybe it is as simple as all of us trying it our own way for long enough for observable patterns to emerge?

We might have already crossed this bridge with cloud computing thanks to the accelerated uptake of robust platforms such as EC2 and App Engine (and the adherence to theme of newer offerings like Azure), but there is still a lot of residual confusion that we might start to mop up if we were so inclined.

The first thing we might stop doing is retrospectively claiming any sort of non-local activity as cloud computing. What's that? You've been using Gmail or Hotmail for years? No, sorry. You are not an ahead-of-the-curve early adopter, you are just a guy who has been using a free web based email system for a while.

Before the inevitable torrent of angry emails rains down upon my inbox, let's pause to think about what we're trying to achieve here. Does classifying the likes of Hotmail and - well, insert your favorite SaaS here - as cloud computing help or hinder the adoption and development of cloud technology? I think we probably establish these analogies because we believe that the familiarity we create by associating a trusted old favorite with a radical new concept may add comfort to perceived risks. But what about the downside of such a broad classification?

These systems are typically associated with a very narrow band of functionality (for example, sending and receiving email or storing and displaying photos) and are freely available (supported by advertising or other 2nd order revenue). They tend to lack the flexibility, identity, and SLA that an enterprise demands. This analogy may well be restricting adoption in the popular mind. Besides, where do you draw the line? Reading this blog? Clicking 'submit' on a web form? Accessing a resource in another office on your corporate WAN? I'm not knocking anyone's SaaS; in fact, the noble pursuits that are our traditional online hosted email and storage systems have been significant contributing forces in the development of the platforms that made the whole cloud computing idea possible.

So, if a lot of our common everyday garden variety SaaS != a good way to talk about cloud computing, then what is?

Let's consider cloud computing from the perspective of the paradigm shift we're trying to create. How about cloud computing as taking resources (compute power and data) typically executed and stored in the corporate-owned datacenter, and deploying them into a shared (but not necessarily public) platform which abstracts access to, and responsibility for, the lower layers of computing?

That may well be the winner of Most Cumbersome Sentence 2008, but I feel like it captures the essence to a certain degree. Let's test our monster sentence against some of the other attributes of cloud computing - again from the perspective of what you're actually doing in a commercial and operational sense when you build a system on a cloud platform:

• Outsourcing concern over cooling, power, bandwidth, and all the other computer room primitives.

• Outsourcing the basic maintenance of the underlying operating systems and hardware.

• Converting a fixed capital outlay into a variable operational expense.

• Moving to a designed ignorance of the infrastructure (from a software environment perspective).

• Leveraging someone else's existing investment in capacity, reach, availability, bandwidth, and CPU power.

• Running a system in which cost of ownership can grow and shrink in line with its popularity.

I think talking about the cloud in this way not only tells us what it is, but also a little about what we can do with it and why we'd want to. If you read this far and still disagree, then enable your caps lock and fire away!

Tuesday 11 November 2008

My agile construction yard

I have been successfully practicing agile for quite a while now, and I've always believed that, given a pragmatic application of the principles behind it, it can be used to manage any process. Mind you, having only ever tried to deliver software with agile, this remained personally unproven. Gauntlet?

So I figured that if I am going to keep going on about it, I am going to have to put my money (and my house) where my mouth is. So I did, and this is the story...

The requirements

I wanted a bunch of work done on my house - extending a room, replacing the whole fence line, building a new retaining wall, laying a stonework patio, new roof drainage, building a new BBQ area, and some interior layout changes - and I thought it's now or never. I spoke to the builder and told him all about agile, lean thinking, project management practices like Scrum and XP, and how they could benefit both of us in delivering House 2.0. He asked if he could speak to the responsible adult who looks after me. "Great, a waterfall builder" I say to myself as I try not to be offended by the 'responsible adult' quip.

But we strike a deal; he's going to do big up front drawings and a quote, and we'll proceed my way at my own risk and responsibility. The game is on.

The first thing we do is run through all the things I want done, which ones are most important to me, and roughly how I want them all to look. I guess you could call this the vision setting. Then my contractor asks me the few big questions; the materials I want to use, budget, and when I want it ready by. He makes some high level estimations on time and cost, based on which I rearrange my priorities to get a few quick wins. We have a backlog.

The project

Our first 'sprint' is the fence line. We come across our first unknown already - the uprights that hold the fence into the ground are concreted in and we either have to take twice as long to tear them out, or build the new fence on the old posts. Direct contact throughout the process and transparency of information ensures that we make the decision together, as customer and delivery team, so that neither of us is left with the consequences of unforeseen situations. I want the benefit of new posts so I'm quite happy to eat the costs put forward.

Next we do the retaining wall, and we have a quick standup to go over the details - we need to decide on a few things like the exact height and the type of plants growing across the top. Since the fence has been done I go with some sandy bricks that match the uprights and the wall is constructed without incident. The next thing we're going to tackle is the BBQ area; however, beyond that the roadmap calls for the room extension and so we need to apply for planning consent in order to get the approval in time. Agile doesn't mean no paperwork and no planning, it means doing just enough just in time for when you need it.

Now we hit our first dependency - the patio must be laid first before we can build the BBQ area. That's cool, and through our brief daily catchups, we come up with an ideal layout and pick out some nifty blocks. A bit of bad weather slows down the cementing phase slightly, but we're expecting that - this is England. We use the opportunity to draft some drawings for the room extension and get the consent application lodged.

It's BBQ building time. I've been thinking about it since we started the project, and I decided I wanted to change it. The grill was originally going up against one wall, but wouldn't it be much more fun if it was right in the middle so everyone could stand 360 around it and grill their own meat? You bet it would. We built a couple of examples out of loose bricks (prototypes?) and then settled on a final design. It takes a bit more stone than the original idea, but it's way more awesome.

Then our project suffered its first major setback - the planning consent process uncovers that a whole lot of structural reinforcement will be needed if they're going to approve the extension. That pretty much triples the cost of adding the extra space. Is it still worth it at triple price? Not to me. Lucky we didn't invest in a lot of architect's drawings and interior design ideas; they'd be wasted now (specifications are inventory and inventory is a liability). So we start talking about alternatives, and come up with a plan to create the new space as storage and wardrobes - not exactly what I had in mind up front, but at less than half the original cost it still delivers 'business value'.

The retrospective

So how did it all turn out? Well, as a customer, I felt involved and informed throughout the whole process, and the immediate future was usually quite predictable. Throughout the project I had the opportunity to adjust and refine my requirements as I saw the work progress, and I always made informed tradeoffs whenever issues arose. I am happy, the builder is happy, and I got exactly what I wanted - even though what I really wanted ended up quite different to what I thought I wanted when we started.

Oh and if anyone wants a good building contractor in Surrey...

Friday 7 November 2008

Cost in the Cloud

Cost is slated as benefit number 1 in most of the cloud fanboy buzz, and they're mostly right: usage-based and CPU-time billing models do mean you don't have tons of up front capital assets to buy - but that's not the same thing as saying all your cost problems are magically solved. You should still be concerned about cost - except now you're thinking about expensive operations and excess load.

Code efficiency sometimes isn't as acute a concern on a traditional hardware platform because you have to buy all the computers you'll need to meet peak load, and keep them running even when you're not at peak. This way you usually have an amount of free capacity floating around to absorb less-than-efficient code, and of course when you're at capacity there is a natural ceiling right there anyway.

Not so in the cloud. That runaway process is no longer hidden away inside a fixed cost, it is now directly costing you, for example, 40c an hour. If that doesn't scare you, then consider it as $3504 per year - and that's for one instance; how about a bigger system of 10 or 15 instances? Now you're easily besting $35K and $52K for a process that isn't adding proportionate (or at worst, any) value to your business.

Yikes. So stay on guard against rogue processes, think carefully about regularly scheduled jobs, and don't create expensive operations that are triggered by cheap events (like multiple reads from multiple databases for a simple page view) if you can avoid it. When you are designing a system to run on a cloud platform, your decisions will have a significant impact on the cost of running the software.
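
For anyone who wants to check my sums, here is a minimal sketch of the arithmetic behind those figures. The 40c an hour rate is just the example number from above, not a quote from any real provider, and the function name is made up for illustration. It assumes the process runs flat out all year on every instance.

```python
# Rough yearly cost of a process that never stops running.
# Assumption: billed per instance-hour at a flat rate, 365-day year.
HOURS_PER_YEAR = 24 * 365  # 8760 hours


def annual_cost(rate_per_hour: float, instances: int = 1) -> float:
    """Dollars per year for a non-stop process on `instances` machines."""
    return rate_per_hour * HOURS_PER_YEAR * instances


# The example rate from the post: 40c an hour.
for n in (1, 10, 15):
    print(f"{n:>2} instance(s): ${annual_cost(0.40, n):,.0f} per year")
```

Swap in your own hourly rate and instance count to see what a forgotten job is really costing you.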

Monday 3 November 2008

Eachan's Famous Interview Questions - Part II

A while back I posted some engineering manager/leader interview questions that I frequently use - designed to test how someone thinks, what their priorities are, and how they'd approach the job - rather than whether or not they can do the job at all. As I said back then, if you're at a senior level and you're testing to see if someone is capable of the basic job at all, then you're doing it wrong (rely on robust screening at the widest point - your time is valuable).

Like everything else, this is subject to continuous improvement (agile interviewing - we're getting better at it by doing it) and with more repetition you tend to develop more ways of sizing people up in a 1 hour meeting. So here is iteration 2:

1. What is the role of a project leader? Depending on your favorite SDLC, that might be a project manager, SCRUM master, or team leader - but what you're looking for is a distinction between line management (the maintenance of the team) and project management (the delivery of the work).

[You might not make such distinctions in your organization; it is important to note that all these questions are intended to highlight what an individual's natural style is, not to outline a 'right' way to do it.]

2. Walk through the key events in the SDLC and explain the importance of each step. It is unlikely any candidate (except maybe an internal applicant) is going to nail down every detail of your SDLC, but what you're hoping to see is a solid, basic understanding of how ideas are converted into working software. It seems overly simple, but you'd be surprised how many people, even those who have been in the industry many years, are really uneasy about this. Award extra credit for 'importances' that benefit the team as well as the business (for example - product demos are good for the team's morale etc).

3. Who is in a team? Another dead simple one, and what you are testing for is engagement, inclusion, and transparency. Everyone will tell you developers, and usually testers, but do they include NFRs like architects? Supporting trades like business analysts and project managers? How about the customer him/herself?

4. What is velocity, how would you calculate it, and why would you want to know? Their ability to judge what their team is capable of is the key factual basis for the promises they'll make, and for how they'll monitor the team's performance and help it improve over iterations.